Proj 3 - IMAGE WARPING and MOSAICING

Proj 3A: Manual Point Selection

Shoot the Pictures & Select Points

As we learn from the lecture, one key part of warping is that we need a set of points as evidence for the Homography matrix. In my code implementation, I use plt.ginput function to select sets of points who have corresponding orders.

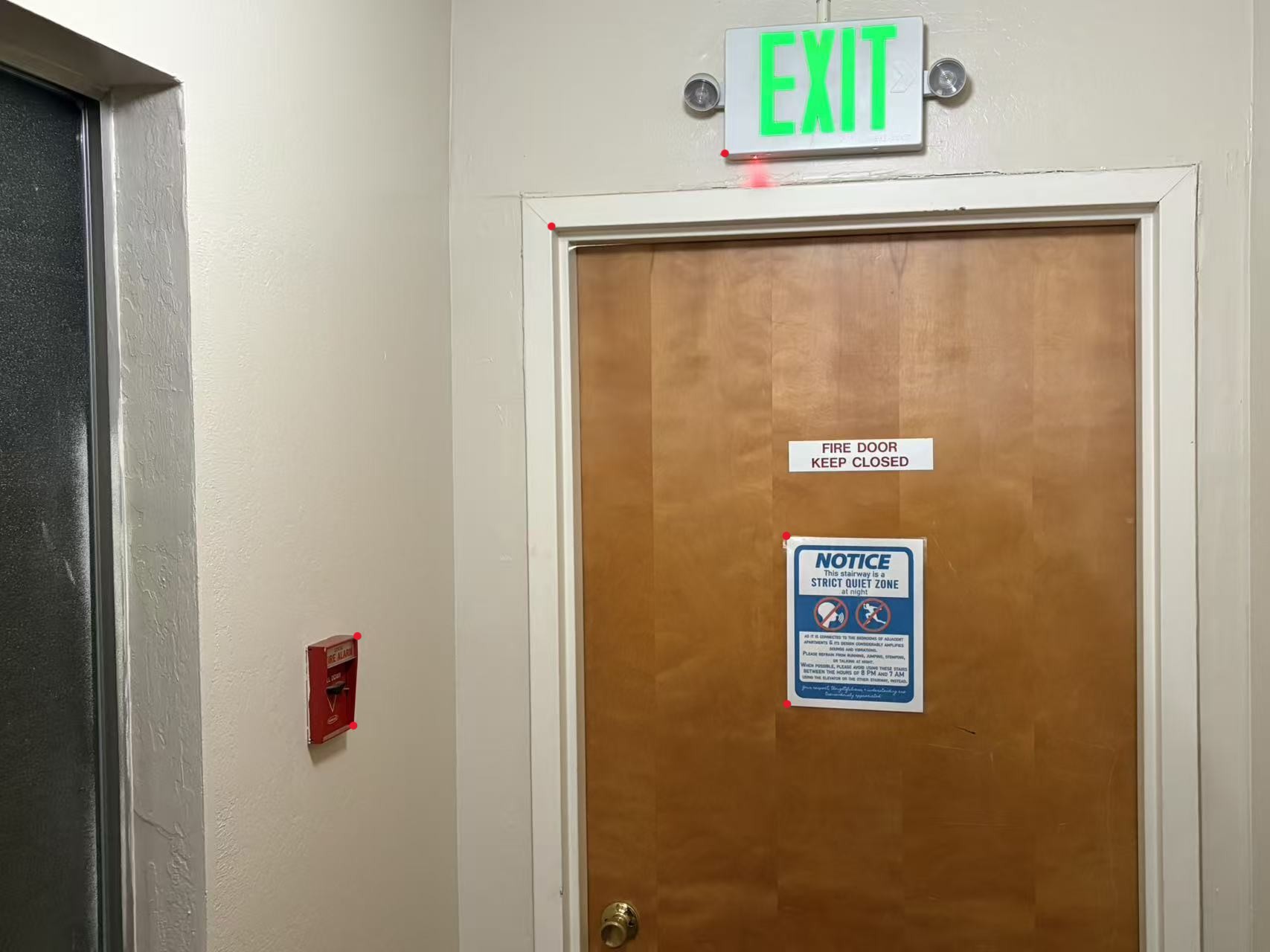

In my final implementation, I can stitch three pictures together. I shoot the photos following the requirement of fixing center of projection and rotate the camera only. They are as follows:

Recover Homographies

With the points obtained, my first thought is to use the optimization technique (machine learning method): where: But this method can be problematic: the right-bottom entry of \(H\) is not guaranteed to be 1! In another word, this constraint is difficult to be expressed in optimization problem. If I divide \(H\) by \(h_{33}\), then objective L2 norm can change w.r.t. difference \(h_{33}\)! The project website hints that the dimension of freedom of \(H\) is 8, therefore I will expand the equations manually and try to seek for more inspiration. Suppose for a pair of corresponding points \((xp_w \ yp_w \ w), (x \ y \ 1)\):

\((xp_w \ yp_w \ w)\) match the original coordinate \(xp, yp\)

Then we can expand the equations: Then convert back to the two dimension coordinates: From our objectives above, we can tell that even all entries are divided by \(h_{33}\), the formulas still hold true! We can re-write as: We can construct: Note that in the scenario above, I only consider one point. Matrix \(A\) can stack \(n\) times, forming a \(\mathbf{A} \in R^{2n\times9}\) matrix. The constraint is \(||h||_2^2=1\). Our goal is now: If we SVD matric \(A\), i.e., \(\mathbf{A} = \mathbf{U} \mathbf{S} \mathbf{V}^T\), then (the norm we are using is L2): Note that \(\mathbf{S}\) is a matrix consisting singular values. Note that since \(2n>9\), then there are more rows than columns, which means that there are rows on the bottom which are filled with 0! For column vector \(\mathbf{V}^T\mathbf{h}\), its norm is a fixed 1, so we can simply try to set only the last element as 1 while the others are 0! In another word, we want: So our optimal \(h\) can be the last row of \(\mathbf{V}\)! After that, I can divide all entries with the \(h_{33}\). And after assembling them together, we get the homography matrix.

After the class and edstem post in the following week, I realizes that I can set \(h_{33}\) to 1 and solve the equations via np.linalg.lstsq. So the problem formulation is now as follows, and can be easily solved by the np.linalg.lstsq:

For the deliverable correspondences visualized on the images and recovered homography matrix, please refer to them in the Warp the Images section below.

Warp the Images

Since the homography matrix has been obtained, then to warp the image, I have to first calculate the overall size of the expected image and then calculate the value of pixels using Bilinear or Nearest Neighbor technique. Note that the actual place for the center of the pixel is actually: \((row+0.5, col+0.5)\)

After calculating the overall size of the expected image, this overall shape actually undergoes two stage from the picture that is going to be warped: first warp, and then translate. The translation can be cause when there are negative warped pixel positions. The overall area will be applied the inverse of \(Translation * H\), and source from the warped original picture with Bilinear or Nearest Neighbor technique taught in the lecture.

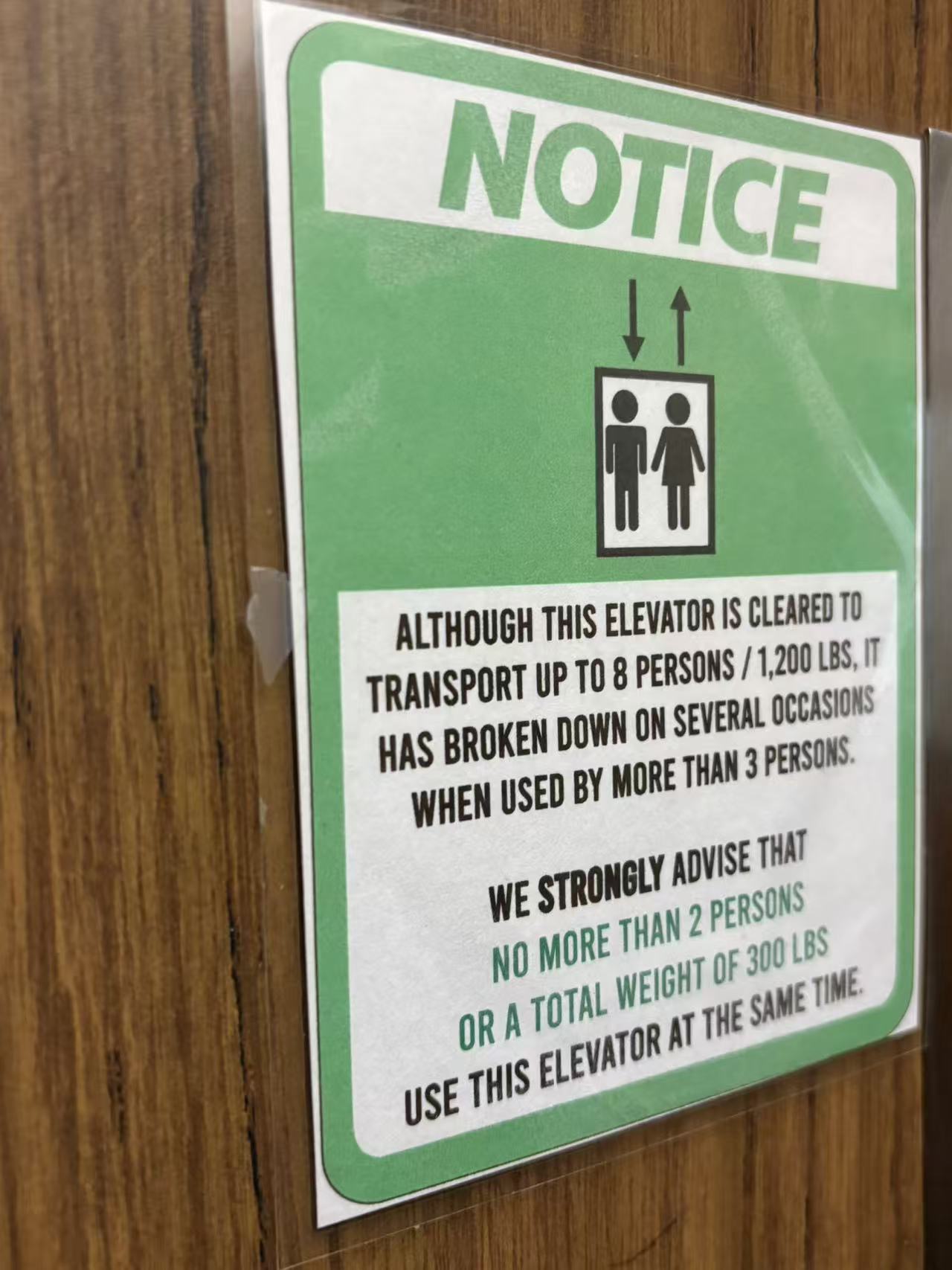

Now I will test the Rectification Part: I input a picture, create a blank rectangle with the same size, and pick rectangle points to align. (In another word, I replace the picture to be stitched as a blank one, to demonstrate that my warping is safe and sound. This is helpful for sanity check and debugging). The results are as follows:

From left to right: original, bilinear, nearest neighbor

Homography Matrix:

[[ 5.07951563e-01 -3.32904310e-02 2.15065395e+00]

[-1.95431423e-01 5.91330231e-01 2.49431655e+02]

[-3.02312517e-04 -3.34458470e-05 1.00000000e+00]]

Homography Matrix:

[[ 5.04835194e-01 -3.73939833e-02 1.16087680e+01]]

[-1.81158560e-01 5.83232691e-01 2.45054643e+02]

[-2.86528153e-04 -4.52234239e-05 1.00000000e+00]]

Here we can see that for the sign and picture with obvious rectangle frame in the real world, I successfully reshape them into the real rectangle in the warped figure, and the warped figure is correct. As for the comparison between Bilinear and NearestNeighbor, I zoom in the 'elevator man and woman part' in the first picture gallery and get the following result:

Left: Bilinear; Right: NearestNeighbor

We can tell that Bilinear is better in figure quality as NearestNeighbor has notable aliasing phenomenon. At the same time, Bilinear costs slightly more time.

Blend the Images into a Mosaic

Now I have the warped images and the overall size of resulting image. Since I've considered the translation issue in Recover Homography section, now all the warped images are in the right place. Note that the unwarped image (which is the middle one of the three pictures) will be translated as well to move to the right place. And I can get the overall 'panorama canvas' shape. I will create a pure black (zero-value) overall canvas, and put three warped and translated figures on that.

Originally I try the simplest blend: for the overlap section, take the average of them. For example, for pixels that are covered by 2 images, then I simply take the average of the value of these two figures. The result picture gallery is as below:

The correspondence points are marked in the figures. Red Points: Left two pictures; Purple Points: Right two pictures

I can see the clear edges of these warp and unwarped figures! Now I will use the feather technique. Under the help of cv2.cvtColor and cv2.distanceTransform function, for each warped individual figure, I can generate the weight mask. The algorithm will calculate the every non-zero (non-black) pixel's nearest distance to an edge, and I will utilize this to generate weight. The center of the warped un-black part has the largest distance, so I will divide all numerical value with center's max value for normalization. Then for one pixel covered by \(t\) figures \(f\), and there corresponding weights are \(w\), then:

Of course, if a pixel is not covered by any figure, then its value is set to 0. The pictures gallery of the feather algorithm is as follows:

For the two Homography Matrix listed: The upper one: Left two pictures; The lower one: Right two pictures

Homography Matrix:

[[ 3.06122050e+00 4.39113598e-02 -2.88814588e+03]

[ 7.34412673e-01 2.59447733e+00 -9.75025092e+02]

[ 1.17792019e-03 4.44158753e-05 1.00000000e+00]]

[[ 1.35717752e+00 2.18071555e-02 -6.27664041e+02]

[ 1.27211584e-01 1.24211746e+00 -1.52353887e+02]

[ 1.94604341e-04 3.17155520e-05 1.00000000e+00]]

Homography Matrix:

[[ 1.30102930e+00 8.18834656e-03 -5.89189943e+02]

[ 1.10316917e-01 1.19378131e+00 -1.29417538e+02]

[ 1.67001464e-04 1.10099768e-05 1.00000000e+00]]

[[ 8.34409707e-01 1.15687740e-02 3.25353039e+02]

[-6.43627649e-02 9.37872425e-01 3.11531613e+01]

[-9.49545824e-05 5.08008439e-06 1.00000000e+00]]

Homography Matrix:

[[ 1.60309551e+00 3.36832881e-02 -6.75362213e+02]

[ 2.67356906e-01 1.30042620e+00 -3.13023101e+02]

[ 5.60953866e-04 4.16810563e-05 1.00000000e+00]]

[[ 6.23014666e-01 1.00131026e-03 2.55555207e+02]

[-1.44890682e-01 8.32506764e-01 8.57408488e+01]

[-2.75996624e-04 -3.28634124e-06 1.00000000e+00]]

Now the edge artifact phenomenon is largely eased under the influence of feathering algorithm.

Part 3B: Auto Point Selection and Stitching

Harris Corner Detection

The basic idea: We should easily recognize the point by looking through a small window Shifting a window in any direction should give a large change in intensity. That's why we want to use harris corner as feature.

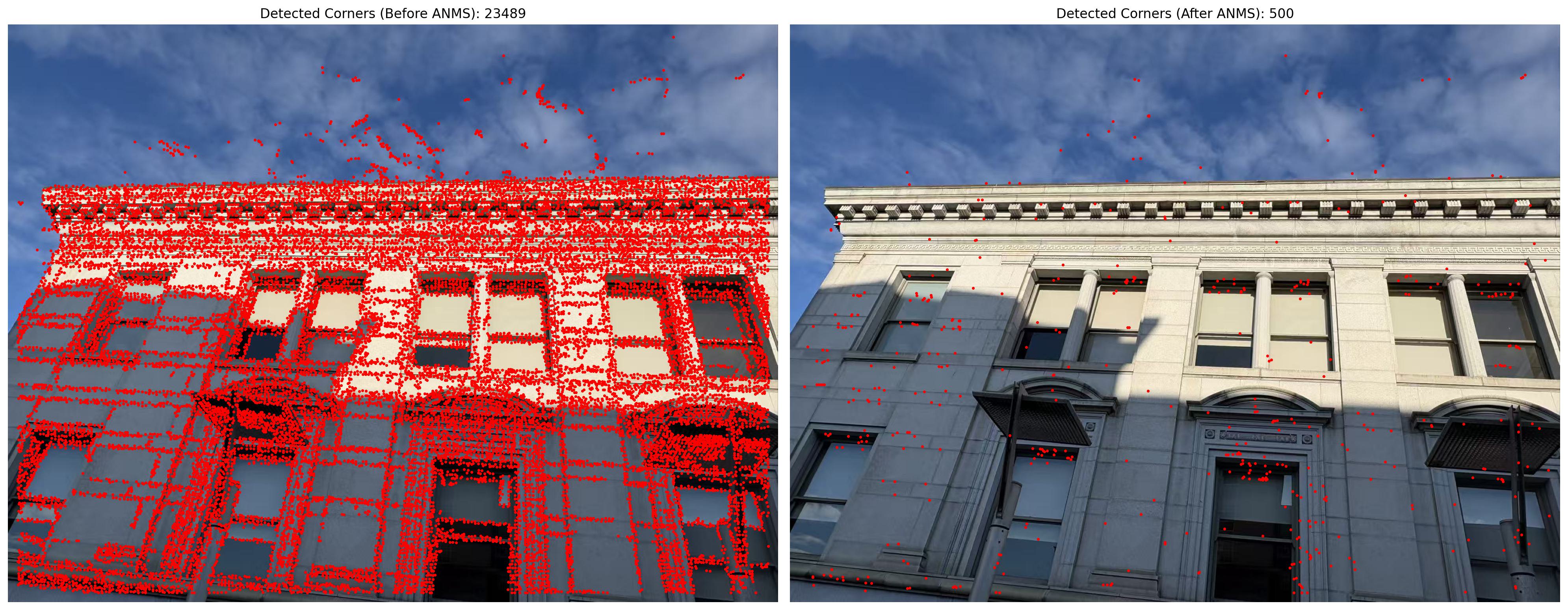

After getting the Harris Corners, it is desirable to restrict the maximum number of interest points extracted from each image. At the same time, it is important that interest points are spatially well distributed over the image, since for image stitching applications, the area of overlap between a pair of images may be small. To satisfy these requirements, we have developed a novel adaptive non-maximal suppression (ANMS) strategy to select a fixed number of interest points from each image. [1]

According to the paper, the minimum suppression radius \(r_i\) is given by: We select the \(n_{ip} = 500\) interest points with the largest values of \(r_i\). [1]

Also, I've noticed that there can be harris corner points who contain little texture, which can be disturbing especially for pictures with rather proportion of plain texture. So I add a filter to discard harris corners whose surrounding texture is not rich enough (i.e., surrounding patch's std should be greater than a threshold). [2] According to my naive experiment, this can be helpful.

The deliverable visualization of example (before and after ANMS) is presented below:

[1] “Multi-Image Matching using Multi-Scale Oriented Patches” by Brown et al.

[2] This solution is inspired by Gemini 2.5 Pro.

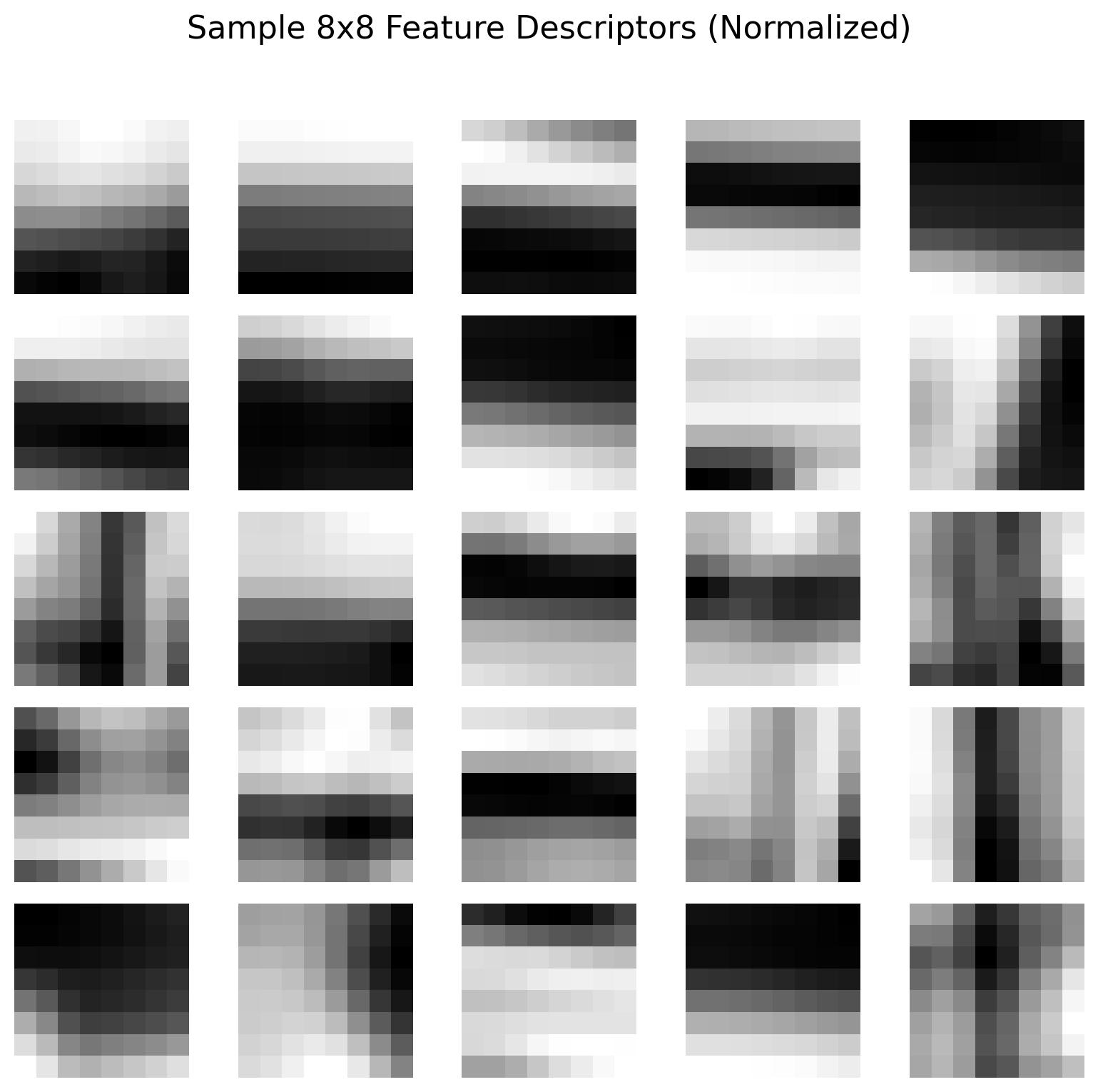

Feature Descriptor Extraction

We use descriptor feature to match the harris corner points in the previous step [3]. I use the same setting in the slide. For every point of interest, I take a square frame with length of 40 and downsample it to 8\(\times\)8, blur it with Gaussian, and then do bias normalization to it.

[3] [Brown, Szeliski, Winder, CVPR’2005]

The example descriptors visualization is as below:

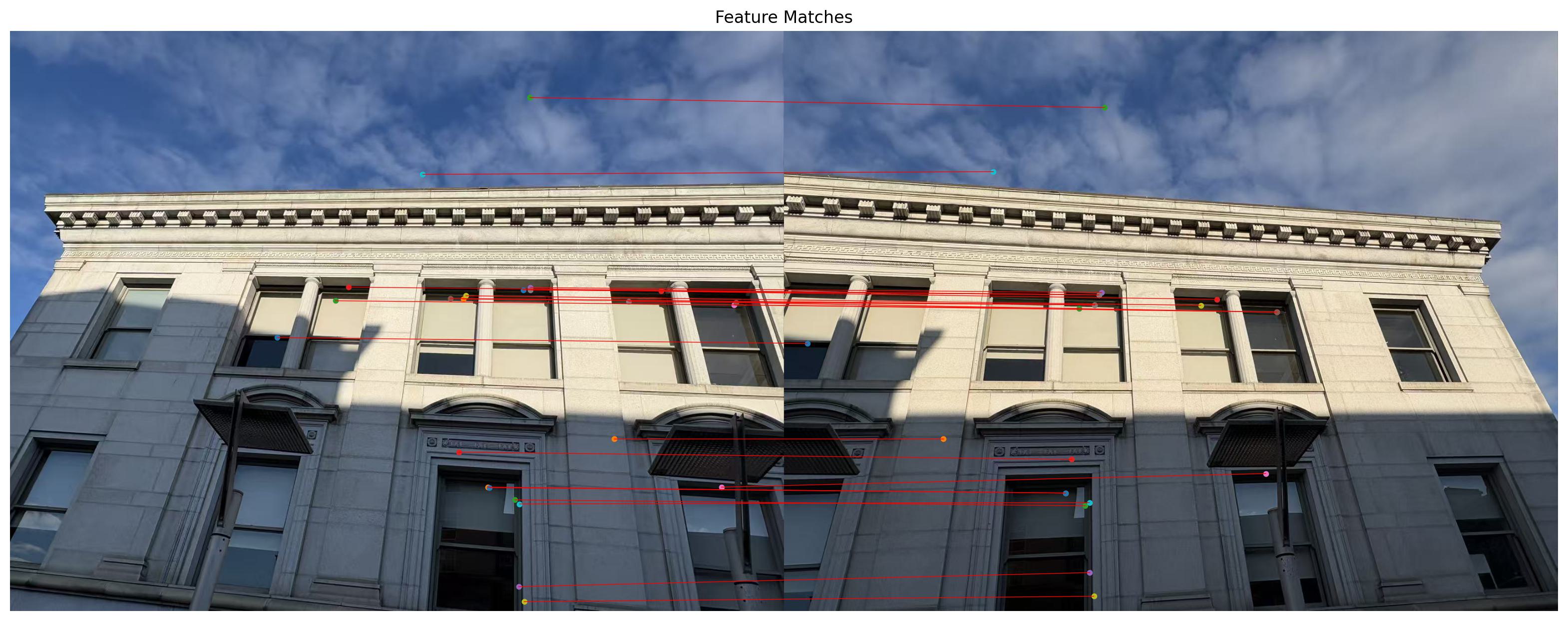

Feature Matching

The matching metric is evaluated, in this implementation, as suggested in harris.py, via Euclidean Distance. Two corners with smaller Euclidean Distance will be considered to match each other more.

Lowe’s trick is also applied to enhance robustness. Lowe’s technique works by assuming that the 1-NN in some image is a potential correct match, whilst the 2-NN in that same image is an incorrect match. In fact, we have observed that the distance in feature space of the 2-NN and subsequent matches is almost constant. [1]

[1] “Multi-Image Matching using Multi-Scale Oriented Patches” by Brown et al.

Visualization example of matched features between image pairs is as below:

RANSAC for Robust Homography

RANSAC loop [4]:

- Select four feature pairs (at random)

- Compute homography H (exact)

- Compute inliers where \(dist(pi’, H pi ) < ε\)

- Keep largest set of inliers

- Re-compute least-squares H estimate on all of the inliers

[4] CS180 slides: feature-alignmentPart2.pdf

OK, now it is all set. The pictures gallery is as follows:

Raw Images:

AutoStitching v.s. Manual Stitching:

Some observations and thoughts

I switched my images compared to part3A. Through some naive experiments, I noticed that:

- The images with rather proportion of plain area (with little texture) can suffer from mismatch

- A picture with suitable white balance (like avoid Highlights in image) can be better

- Avoid symmetric object in the building (at least in the figure)

For the first and second point, I guess that plain areas can be confusing for the algorithm. A picture with rich texture can have more rich descriptors. Though the filter and lowe's trick is designed to deal with them, it is better to ease this phenomenon. For the third point, it is possible (though with very low probability) that two symmetric point in the two figures can have the satisfying matches (four combinations), bringing confusion to the algorithm.

For the reasons above and experiments, I changed the images used in part3B.